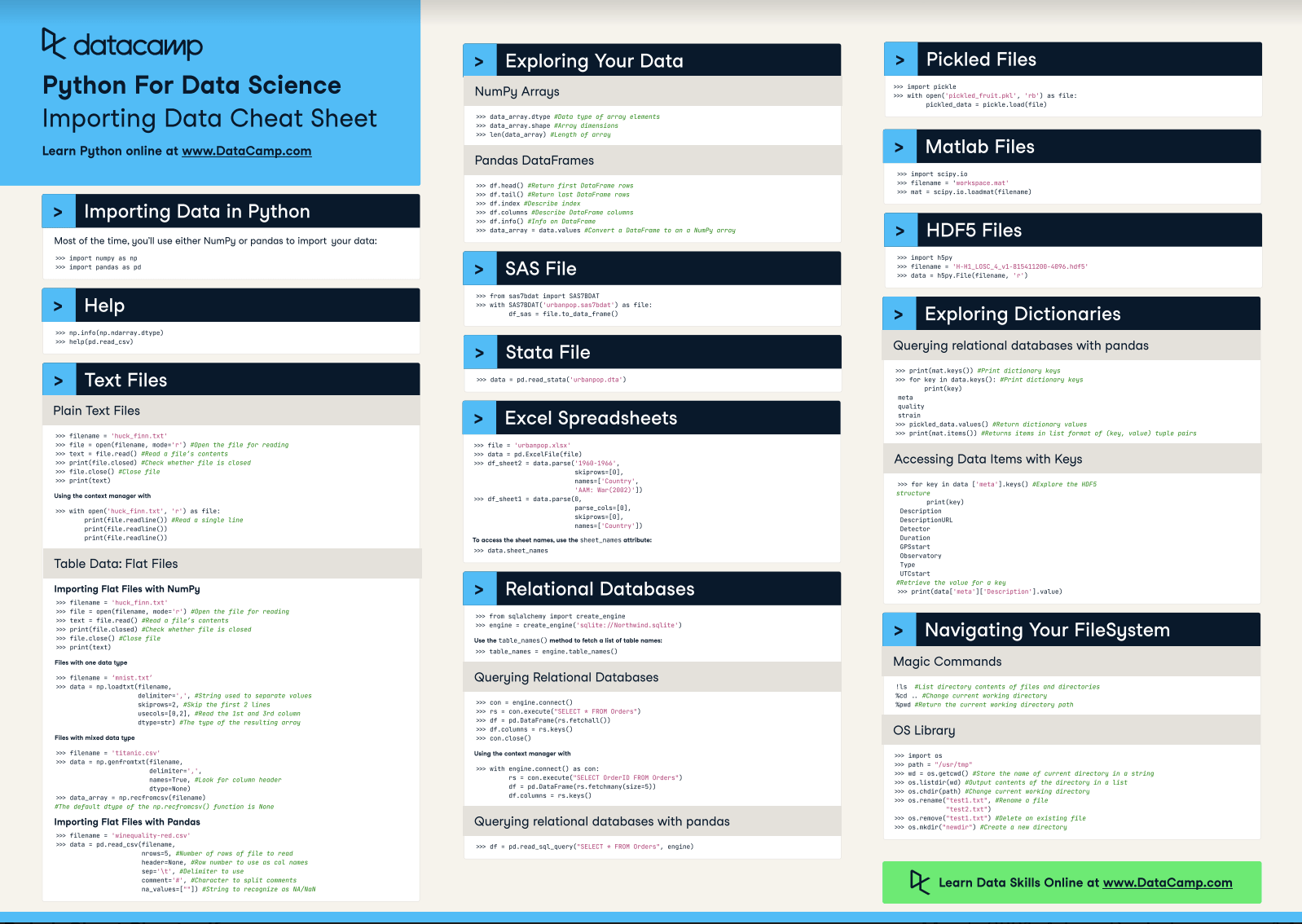

Importing Data in Python Cheat Sheet

With this Python cheat sheet, you'll have a handy reference guide to importing your data, from flat files to files native to other software and relational databases.

Jun 2021 · 5 min read

RelatedSee MoreSee More

tutorial

Everything You Need to Know About Python's Maximum Integer Value

Explore Python's maximum integer value, including system limits and the sys.maxsize attribute.

Amberle McKee

5 min

tutorial

Python KeyError Exceptions and How to Fix Them

Learn key techniques such as exception handling and error prevention to handle the KeyError exception in Python effectively.

Javier Canales Luna

6 min

tutorial

Snscrape Tutorial: How to Scrape Social Media with Python

This snscrape tutorial equips you to install, use, and troubleshoot snscrape. You'll learn to scrape Tweets, Facebook posts, Instagram hashtags, or Subreddits.

Amberle McKee

8 min

tutorial

Troubleshooting The No module named 'sklearn' Error Message in Python

Learn how to quickly fix the ModuleNotFoundError: No module named 'sklearn' exception with our detailed, easy-to-follow online guide.

Amberle McKee

5 min

tutorial

AWS Storage Tutorial: A Hands-on Introduction to S3 and EFS

The complete guide to file storage on AWS with S3 & EFS.

Zoumana Keita

16 min

code-along

Getting Started With Data Analysis in Alteryx Cloud

In this session, you'll learn how to get started with the Alteryx AI Platform by performing data analysis using Alteryx Designer Cloud.

Joshua Burkhow